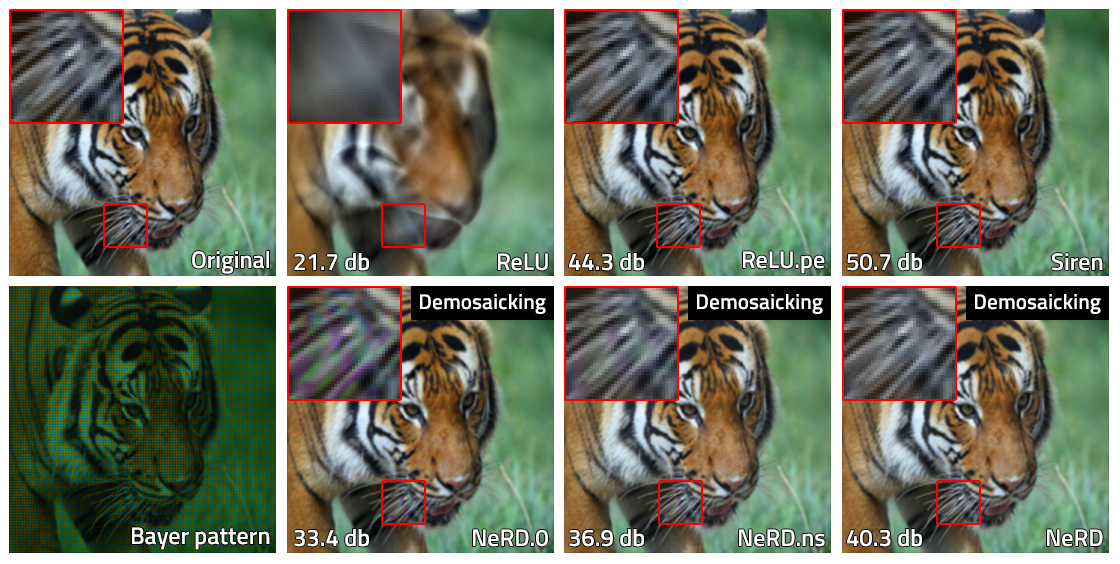

Digital cameras capture raw image data through a color filter array, so each pixel records only one color channel and a demosaicking step is needed to reconstruct a full-color image from the incomplete Bayer pattern. Traditional model-based methods, such as bilinear interpolation and frequency-based filtering, struggle to produce high-quality results, while deep learning approaches, including convolutional neural networks (CNNs) and Transformers, demand substantial computational resources. Our project introduces NeRD (Neural Field-based Demosaicking), an approach that uses implicit neural representations to perform demosaicking accurately and efficiently. NeRD encodes image information into a coordinate-based neural network, enabling spatially consistent color reconstruction. By incorporating prior knowledge through a hybrid ResNet–U-Net encoder, our method significantly improves image quality and narrows the gap between CNN-based and Transformer-based demosaicking models.
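To make the problem concrete, the following is a minimal sketch of Bayer mosaicking and of the bilinear interpolation baseline mentioned above. It is not part of NeRD itself. The RGGB layout, the function names (`bayer_masks`, `mosaic`, `bilinear_demosaic`) and the reflect padding at the borders are assumptions chosen for illustration.

```python
import numpy as np

def bayer_masks(h, w):
    """Boolean sampling masks, shape (h, w, 3), for an assumed RGGB layout."""
    masks = np.zeros((h, w, 3), dtype=bool)
    masks[0::2, 0::2, 0] = True   # red on even rows, even columns
    masks[0::2, 1::2, 1] = True   # green on even rows, odd columns
    masks[1::2, 0::2, 1] = True   # green on odd rows, even columns
    masks[1::2, 1::2, 2] = True   # blue on odd rows, odd columns
    return masks

def mosaic(rgb):
    """Simulate the sensor: keep one color sample per pixel."""
    return (rgb * bayer_masks(*rgb.shape[:2])).sum(axis=-1)

def conv3x3(img, kernel):
    """3x3 filtering with reflect padding, which preserves the Bayer period."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def bilinear_demosaic(raw):
    """Bilinear interpolation written as a normalized convolution per channel."""
    h, w = raw.shape
    masks = bayer_masks(h, w).astype(np.float64)
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=np.float64)
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]], dtype=np.float64)
    out = np.empty((h, w, 3), dtype=np.float64)
    for c, kernel in enumerate((k_rb, k_g, k_rb)):
        weighted_sum = conv3x3(raw * masks[..., c], kernel)
        weight_total = conv3x3(masks[..., c], kernel)
        out[..., c] = weighted_sum / weight_total
    return out
```

Measured samples pass through unchanged and missing values are averaged from the nearest samples of the same color. That averaging is why this baseline blurs edges and produces color artifacts in textured regions.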


Implicit Neural Representations for Image Reconstruction

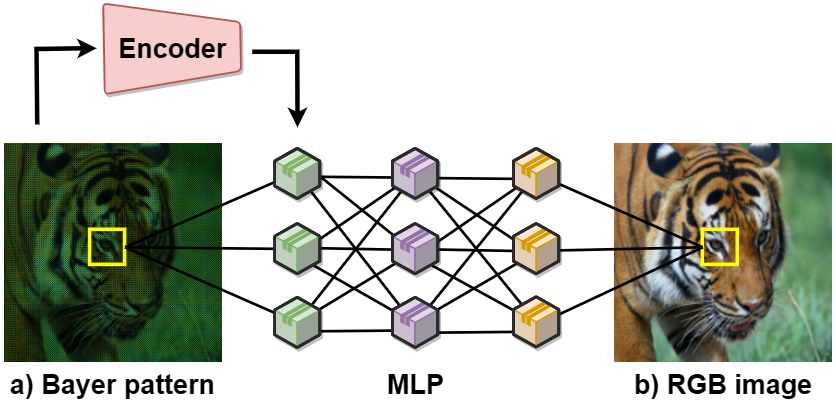

NeRD is built upon the emerging paradigm of Neural Fields (NF), which represent images as continuous functions of pixel coordinates parameterized by a multi-layer perceptron (MLP). Unlike conventional CNNs that operate on fixed grid structures, NeRD models image color in a fully differentiable and resolution-independent manner. The architecture features a coordinate-based MLP with sine activation functions (as in SIREN), which lets the network represent high-frequency image detail while remaining smooth. To further improve spatial coherence and color fidelity, an encoder network extracts local and global feature encodings from the input Bayer pattern and uses them to condition the MLP, so that it generates realistic full-color images. By leveraging these implicit neural representations, NeRD outperforms traditional demosaicking techniques while maintaining computational efficiency.
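As an illustration of the idea, here is a minimal NumPy sketch of the forward pass of a sine-activated coordinate MLP whose input is a pixel coordinate concatenated with a feature vector. It is not our actual architecture: the layer sizes, the frequency scale `omega_0 = 30`, conditioning by concatenation, the zero-valued stand-in for the encoder output, and all function names are assumptions. The weight initialization follows the scheme commonly used for SIREN networks, and the skip connections and training are omitted.

```python
import numpy as np

def init_siren(rng, in_dim, hidden_dim, out_dim, n_hidden=3, omega_0=30.0):
    """Random weights for a sine-activated MLP (SIREN-style initialization)."""
    dims = [in_dim] + [hidden_dim] * n_hidden + [out_dim]
    params = []
    for i, (fan_in, fan_out) in enumerate(zip(dims[:-1], dims[1:])):
        # The first layer uses a wider range than the following layers.
        bound = 1.0 / fan_in if i == 0 else np.sqrt(6.0 / fan_in) / omega_0
        weights = rng.uniform(-bound, bound, size=(fan_in, fan_out))
        params.append((weights, np.zeros(fan_out)))
    return params

def siren_forward(params, coords, features, omega_0=30.0):
    """Map (coordinate, feature) pairs to RGB values."""
    x = np.concatenate([coords, features], axis=-1)
    for weights, bias in params[:-1]:
        x = np.sin(omega_0 * (x @ weights + bias))  # sine activation
    weights, bias = params[-1]
    return x @ weights + bias                        # linear RGB output

def pixel_grid(h, w):
    """Pixel-centre coordinates in [-1, 1]^2, flattened to shape (h * w, 2)."""
    ys, xs = np.linspace(-1, 1, h), np.linspace(-1, 1, w)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([xx, yy], axis=-1).reshape(-1, 2)

# Hypothetical usage: 8-dimensional features stand in for the encoder output.
rng = np.random.default_rng(0)
params = init_siren(rng, in_dim=2 + 8, hidden_dim=16, out_dim=3)
coords = pixel_grid(4, 5)
features = np.zeros((coords.shape[0], 8))
rgb = siren_forward(params, coords, features).reshape(4, 5, 3)
```

Because the network takes continuous coordinates, the same weights can be queried on a denser grid such as `pixel_grid(8, 10)`, given matching features. This is what resolution independence means here.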

Performance and Comparison with State-of-the-Art Methods

Extensive experiments on benchmark datasets demonstrate that NeRD outperforms traditional model-based methods such as Malvar and Menon interpolation and surpasses the CNN-based DeepDemosaick in terms of reconstruction accuracy and fine detail preservation. While Transformer-based models like RSTCANet achieve slightly higher peak signal-to-noise ratios (PSNR), NeRD excels in preventing over-smoothing and maintaining texture integrity. The system is evaluated using standard image quality metrics, namely PSNR and the Structural Similarity Index (SSIM), which show a significant improvement over prior CNN-based models. Additionally, an ablation study highlights the impact of key architectural components, such as skip connections in the MLP and local feature encoding in the ResNet–U-Net encoder, both of which contribute to NeRD's reconstruction quality.
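For reference, PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the reconstructed image and MAX is the largest possible pixel value. A minimal sketch follows. The function name `psnr` and the default range of [0, 1] are assumptions, and this is not the evaluation code used in our experiments.

```python
import numpy as np

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB; higher means a closer reconstruction."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For 8-bit images, pass `max_value=255`. PSNR measures only pixel-wise error, which is why it is reported together with SSIM, a metric that also accounts for local structure.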


Future Directions and Impact on Image Processing

NeRD represents a significant step forward in computational photography and image processing. By integrating neural fields into the demosaicking pipeline, this approach paves the way for future research on neural representations for image enhancement, super-resolution, inpainting, and video frame interpolation. Future improvements may include Transformer-based components in the encoder and loss functions tailored to Bayer pattern reconstruction. NeRD's ability to generate high-quality color images with lower computational overhead makes it a promising solution for camera manufacturers, mobile imaging applications, and AI-driven photography workflows. By advancing demosaicking through neural fields, our project bridges the gap between traditional image processing and current deep learning methods, opening new possibilities for next-generation vision systems.

Contact person: Filip Šroubek