Images captured by sensors are often incomplete or degraded and must be reconstructed into a usable form. A prime example is demosaicking: reconstructing a full-color photograph from the partial color data recorded through a camera’s Bayer filter, where each pixel measures only one of the three color channels. Traditional algorithms, and even standard deep neural networks, can introduce artifacts in this task, especially when the input also suffers from other degradations such as blur. Our project addresses these challenges with implicit neural representations, which model the image as a continuous function, to produce sharper and more faithful images from raw inputs.

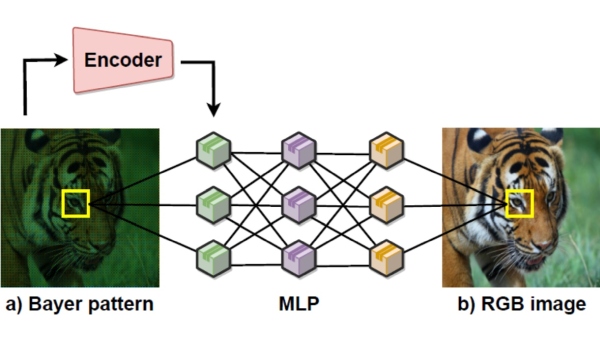

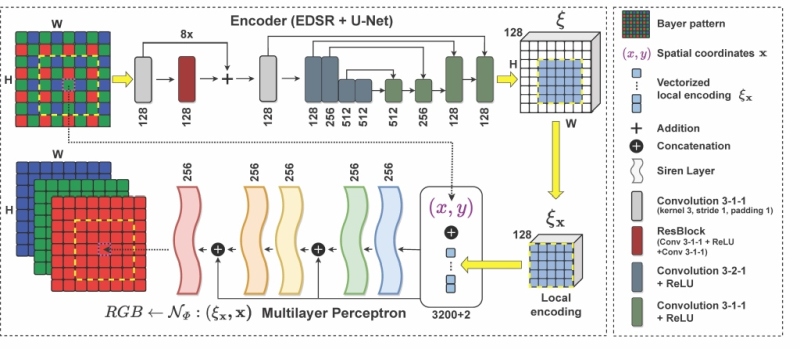

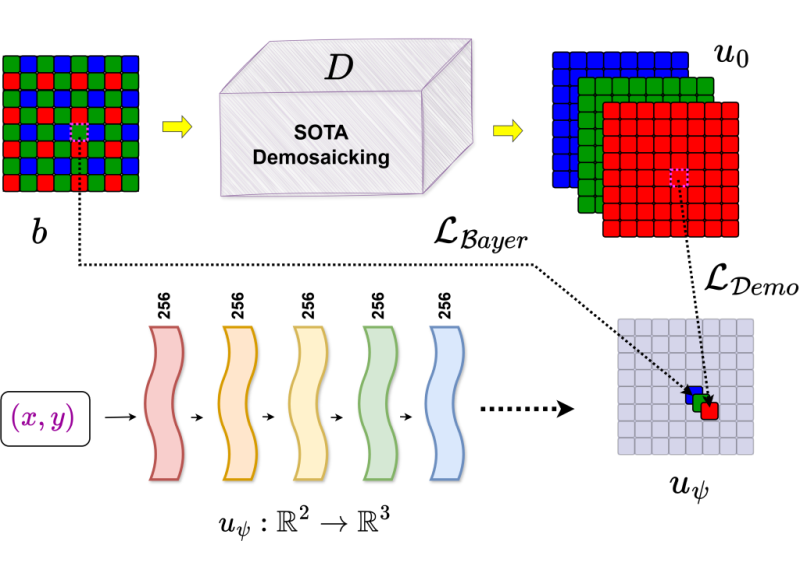
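To make the demosaicking problem concrete, the minimal sketch below simulates what a Bayer sensor records from a full-color image. The RGGB layout and the helper names `bayer_masks` and `mosaic` are assumptions chosen for illustration; real cameras may use other layouts.

```python
import numpy as np

def bayer_masks(height, width):
    """Boolean sampling masks for an RGGB Bayer pattern (assumed layout)."""
    rows, cols = np.mgrid[0:height, 0:width]
    red = (rows % 2 == 0) & (cols % 2 == 0)
    blue = (rows % 2 == 1) & (cols % 2 == 1)
    green = ~(red | blue)
    return np.stack([red, green, blue], axis=-1)

def mosaic(rgb):
    """Keep a single color sample per pixel, as a Bayer sensor would."""
    masks = bayer_masks(*rgb.shape[:2])
    return (rgb * masks).sum(axis=-1), masks

rng = np.random.default_rng(0)
image = rng.random((4, 6, 3))      # stand-in for a full-color image
raw, masks = mosaic(image)

print(raw.shape)                   # one value per pixel instead of three
print(masks.mean(axis=(0, 1)))     # a quarter red, half green, a quarter blue
```

Demosaicking is the inverse task: recovering all three channels of `image` from `raw`, where two thirds of the color values were never measured.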

At the heart of our approach is the use of implicit neural representations. Unlike conventional convolutional networks that operate on a fixed pixel grid, an implicit representation treats an image as a continuous mathematical function modeled by a neural network. In our method NeRD (Neural field-based Demosaicking), the image is represented by a coordinate-based neural network (an MLP) that directly generates color values for any given pixel coordinates. This MLP is coupled with an encoder network that injects features learned from training data, which helps the model reconstruct fine details and textures accurately. Thanks to this design, NeRD outperforms traditional methods and previous CNN-based approaches and comes close to the performance of modern Transformer-based models.

Diagram of the NeRD network architecture.
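The idea of a coordinate-based network conditioned on encoder features can be sketched as follows. This is an untrained toy, not the NeRD architecture: the layer sizes, the sine activations, the sigmoid output, and the random `features` array standing in for the encoder output are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Random weights for a small MLP; the layer sizes are illustrative."""
    return [(rng.normal(0, np.sqrt(1.0 / m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def field(params, coords, features):
    """Map (x, y) coordinates plus per-pixel features to RGB values."""
    h = np.concatenate([coords, features], axis=-1)
    for w, b in params[:-1]:
        h = np.sin(h @ w + b)                    # sine activation (assumed)
    w, b = params[-1]
    return 1.0 / (1.0 + np.exp(-(h @ w + b)))    # squash colors into (0, 1)

def pixel_grid(height, width):
    """Pixel-center coordinates normalized to [-1, 1], one (x, y) per row."""
    ys = (np.arange(height) + 0.5) / height * 2 - 1
    xs = (np.arange(width) + 0.5) / width * 2 - 1
    return np.stack(np.meshgrid(xs, ys), axis=-1).reshape(-1, 2)

H, W, F = 8, 8, 16
params = init_mlp([2 + F, 64, 64, 3])
features = rng.normal(size=(H * W, F))   # placeholder for encoder features
rgb = field(params, pixel_grid(H, W), features).reshape(H, W, 3)
print(rgb.shape)
```

Because `field` takes coordinates as input rather than a pixel array, the image is defined by the network weights instead of a fixed grid of values.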

While NeRD is trained on a large set of examples, our follow-up method INRID (Implicit Neural Representation for Image Demosaicking) takes the idea further: it can learn from just a single image. INRID is a self-adaptive approach that optimizes a coordinate-based neural network for each individual photograph. In practice, we can feed an arbitrary raw sensor image into the system, and INRID will fit itself to that image, filling in its missing colors and suppressing additional imperfections such as blur or noise. This happens without any extra training data, because the network learns the features of the image on the fly, directly from the input. Such a self-supervised strategy allows INRID to handle situations that deviate from typical training conditions, delivering high-quality results even for images with unusual defects.
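The per-image fitting idea can be illustrated with a deliberately simplified sketch. It is not the INRID implementation: to stay dependency-free, the MLP is replaced by a linear layer over random Fourier features of the coordinates, only the mosaic sampling is modeled (no blur or noise), and the RGGB layout, loss, step size, and iteration count are assumptions. The key point is that the loss compares the model output with the raw data only where the sensor actually measured a value.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W = 16, 16
ys, xs = np.mgrid[0:H, 0:W]
x, y = xs / W, ys / H

# Synthetic smooth scene, used only to simulate a raw capture.
truth = np.stack([0.5 + 0.4 * np.sin(2 * np.pi * x),
                  0.5 + 0.4 * np.cos(2 * np.pi * y),
                  0.5 + 0.4 * np.sin(2 * np.pi * (x + y))], axis=-1)

# RGGB sampling masks (assumed layout) and the single-channel raw image.
red = (ys % 2 == 0) & (xs % 2 == 0)
blue = (ys % 2 == 1) & (xs % 2 == 1)
mask = np.stack([red, ~(red | blue), blue], axis=-1).astype(float)
raw = (truth * mask).sum(axis=-1)

# Coordinate model: random Fourier features followed by a linear layer.
coords = np.stack([x, y], axis=-1).reshape(-1, 2)
freqs = rng.normal(0, 2.0, (2, 32))
phi = np.concatenate([np.sin(2 * np.pi * coords @ freqs),
                      np.cos(2 * np.pi * coords @ freqs),
                      np.ones((H * W, 1))], axis=1)
weights = np.zeros((phi.shape[1], 3))

m = mask.reshape(-1, 3)
target = raw.reshape(-1, 1) * m          # raw value placed in its own channel

def loss_and_grad(weights):
    """Masked squared error against the raw samples, and its gradient."""
    residual = m * (phi @ weights - target)
    return (residual ** 2).sum() / m.sum(), 2 * phi.T @ residual / m.sum()

losses = []
for _ in range(500):                     # plain gradient descent on one image
    loss, grad = loss_and_grad(weights)
    losses.append(loss)
    weights -= 0.02 * grad

reconstruction = (phi @ weights).reshape(H, W, 3)   # all three channels
print(losses[0], losses[-1])
```

No ground-truth color image enters the optimization; `truth` is used only to synthesize `raw`. The missing color values come from evaluating the fitted model at every pixel in every channel.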

Our work builds on a broader effort to merge modern AI with classical image processing techniques. For example, an earlier project, D3Net (Deep Demosaicking, Deblurring and Deringing Network), combined physics-based models with neural networks through a technique called deep unrolling, in which the iterations of a classical optimization algorithm become the layers of a trainable network. This enabled a single network to remove blur and perform demosaicking simultaneously, while requiring only a minimal amount of training data. Another method, Dual-Cycle, applied cycle-consistent learning (CycleGAN) to self-supervised reconstruction in fluorescence microscopy: two neural networks learned to transform orthogonal microscope views into each other, making it possible to fuse two perpendicular images into one high-resolution 3D volume without any paired training samples. This experience paved the way for NeRD and INRID, which take the next step by using implicit representations. Together, our methods signal a shift toward AI solutions that are both data-efficient and highly adaptable to new image reconstruction problems.
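As a rough illustration of deep unrolling in general (not of the D3Net architecture), the sketch below runs a fixed number of proximal gradient iterations for a linear inverse problem. Each iteration alternates a physics-based data-fidelity update with a prior update. In an unrolled network the per-iteration parameters, or a small learned module in place of `soft_threshold`, would be trained end to end; here they are fixed, and the forward operator `A` is a hypothetical random matrix.

```python
import numpy as np

def soft_threshold(x, tau):
    """Proximal step for an L1 prior; stands in for a learned module."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def unrolled_solver(y, A, steps, taus):
    """Run one 'layer' per (step, tau) pair of proximal gradient descent."""
    x = np.zeros(A.shape[1])
    for step, tau in zip(steps, taus):
        x = x - step * A.T @ (A @ x - y)    # data-fidelity (physics) update
        x = soft_threshold(x, step * tau)   # prior update
    return x

rng = np.random.default_rng(0)
A = rng.normal(size=(30, 60)) / np.sqrt(30)   # hypothetical forward operator
x_true = np.zeros(60)
x_true[[3, 17, 42]] = [1.0, -0.5, 0.8]        # sparse signal to recover
y = A @ x_true                                # simulated measurement

K = 50                                        # number of unrolled layers
step = 1.0 / np.linalg.norm(A, 2) ** 2        # safe step from spectral norm
x_hat = unrolled_solver(y, A, [step] * K, [0.01] * K)
print(np.linalg.norm(A @ x_hat - y), np.linalg.norm(y))
```

Because the forward model appears explicitly in every layer, the trainable part only has to capture the prior, which is one reason unrolled networks can work with little training data.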

In essence, we are teaching neural networks not just to enhance images, but to re-imagine and re-create them from the raw data – opening the door to a new generation of intelligent image processing. By harnessing these advanced neural approaches, our project demonstrates how AI can more effectively solve long-standing image reconstruction challenges, whether in a consumer camera or a scientific instrument.

INRID schematic: A self-adaptive approach that fine-tunes a neural network from a raw image to automatically reconstruct missing colors and remove blur.

Contact person: Tomáš Kerepecký

Related Publications:

- KEREPECKÝ, Tomáš; ŠROUBEK, Filip. D3Net: Joint demosaicking, deblurring and deringing. In: 25th International Conference on Pattern Recognition (ICPR). IEEE, 2021, pp. 1–8.

- KEREPECKÝ, Tomáš; LIU, Jiaming; NG, Xue W.; PISTON, David W.; KAMILOV, Ulugbek S. Dual-Cycle: Self-Supervised Dual-View Fluorescence Microscopy Image Reconstruction using CycleGAN. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023.

- KEREPECKÝ, Tomáš; ŠROUBEK, Filip; NOVOZÁMSKÝ, Adam; FLUSSER, Jan. NeRD: Neural field-based demosaicking. In: 2023 IEEE International Conference on Image Processing (ICIP). IEEE, 2023, pp. 1735–1739.

- KEREPECKÝ, Tomáš; ŠROUBEK, Filip; FLUSSER, Jan. Implicit Neural Representation for Image Demosaicking. Digital Signal Processing, 2025, 159: 105022.