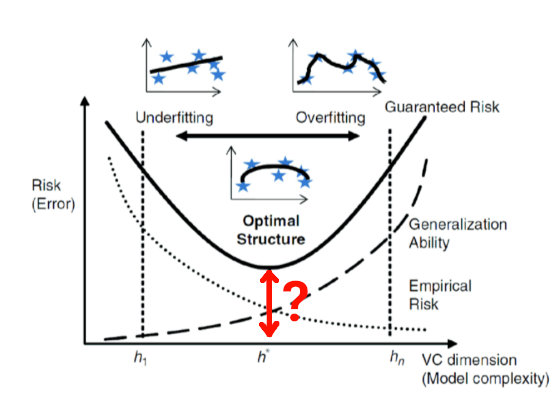

Abstract: This overview lecture introduces the Probably Approximately Correct (PAC) learning model. The model relies on an essential characteristic, the Vapnik-Chervonenkis (VC) dimension, which measures the capacity of the class of models being learned and links it to the size of the training and testing datasets. The audience will be introduced to the basic properties of these concepts. The explanation will focus in particular on using the PAC model to estimate the dataset size needed to train artificial neural networks.
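As an illustration of how the VC dimension turns into a dataset-size estimate, the sketch below evaluates one classic sufficient sample-size bound for a consistent learner (Blumer, Ehrenfeucht, Haussler and Warmuth, 1989): m ≥ max((4/ε)·log2(2/δ), (8d/ε)·log2(13/ε)), where d is the VC dimension, ε the permitted error and δ the permitted failure probability. This is an assumption for illustration only; the lecture may use different or sharper bounds, and the function name `pac_sample_size` is hypothetical.

```python
import math


def pac_sample_size(vc_dim: int, epsilon: float, delta: float) -> int:
    """Hypothetical helper: number of examples sufficient (under the assumed
    Blumer et al. 1989 bound) for a consistent learner over a hypothesis class
    of VC dimension `vc_dim` to reach error <= epsilon with probability >= 1 - delta."""
    if vc_dim < 1:
        raise ValueError("vc_dim must be a positive integer")
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("epsilon and delta must lie in the open interval (0, 1)")
    # Term driven by the required confidence 1 - delta.
    confidence_term = (4 / epsilon) * math.log2(2 / delta)
    # Term driven by the capacity (VC dimension) of the hypothesis class.
    capacity_term = (8 * vc_dim / epsilon) * math.log2(13 / epsilon)
    # The bound requires at least the larger of the two terms, rounded up.
    return math.ceil(max(confidence_term, capacity_term))


if __name__ == "__main__":
    # Illustrative values only: VC dimension 10, 10 % error, 95 % confidence.
    print(pac_sample_size(vc_dim=10, epsilon=0.1, delta=0.05))
```

The estimate grows linearly with the VC dimension and roughly as (1/ε)·log(1/ε) as the permitted error shrinks. For a neural network, the VC dimension itself must first be bounded from its architecture, which is a separate (and harder) step.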

Who: František Hakl, Ústav Informatiky AV ČR

When: 10:00 a.m. Friday, April 25

Where: The session will take place in person at the Institute of Information Theory and Automation (UTIA), room 45 (café). Directions to the institute are available at: https://zoi.utia.cas.cz/index.php/contact

Language: Czech (if you require English, please let us know in advance)

Zoom: https://cesnet.zoom.us/j/95065281700

About the author: František Hakl is a researcher at the Institute of Computer Science of the Czech Academy of Sciences. His research interests include machine learning, in particular the theoretical aspects of deep learning methods: the approximation potential of these methods, lower and upper estimates of the complexity of their architectures, and estimates of the number of training patterns that guarantee a predefined learning accuracy. He applies these methods to the detection of physical processes in high-energy physics. He designed a specific neural network that allows extremely fast data processing, suitable for the online evaluation of very fast physical phenomena.